Acceleration AI Ethics

5 Elements

| Element | Description |
| --- | --- |
| 1. Innovation solves innovation problems | When innovation creates ethical problems, the technology can be slowed to minimize the difficulties, or it can surge ahead to overcome them. Acceleration is the second. Human and ethical problems previously caused by innovation are resolved by still more AI innovating. (Innovation is an acceleration engine.) |
| 2. Innovation is intrinsically valuable | Innovation resembles artistic creativity in being worthwhile even before considering social implications. Consequently, an ethical burden tips: engineers no longer need to justify starting their models; instead, reasons need to be demonstrated for stopping. |
| 3. Uncertainty is encouraging | The unknown is potential more than risk, motivating more than menacing. The unknown is not potential for something, but potential in the sense of unlimited possibilities, as it exists for travelers but not tourists. |
| 4. Decentralization | The overarching ethics of permissions and restrictions governing AI derive from the broad community of users and their uses, instead of representing a select group’s peremptory judgments. Permissions and restrictions come after AI deployment and from within it, instead of preceding deployment and standing outside. |
| 5. Embedding | Ethics works with engineers to discover what AI can do, instead of dictating what cannot be done. |

1. Innovation solves innovation problems

When ethical problems emerge from artificial intelligence advances, the response is not to slow AI development but to speed up. Surging innovation resolves the harms previously created by innovation.

Surging innovation to address technology's adverse effects contrasts with the strategy of restricting AI use and pausing its development. The promise of slow AI is fewer human harms and diminished severity. Acceleration promises to eliminate the harms with the same innovation engine that created them.

Generative AI image platforms produce misinformation and deepfake pornography, but the response is not to restrict AI power or limit access. It is to catalyze research into the automatic detection of harmful content so that it may be deleted. Or, it is to develop mathematical distortions that coat original images with perturbations and prevent abusive uses. Regardless, human problems caused by innovation are met with more innovation.

Facing waves of privacy breaches across AI applications from finance to healthcare, the acceleration answer is not to restrict data use with prohibitions and regulations. Instead, creative algorithmic methods including machine unlearning are engineered to increase users' effective control over the release of their information. Or, advances are sought in differential privacy: the injection of noise into datasets that obscures personal information while maintaining data utility. Regardless, the same innovative force that created privacy vulnerabilities leaps ahead to develop new methods of protection.
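The noise injection behind differential privacy can be illustrated with a minimal sketch. It uses the Laplace mechanism, one standard differential privacy technique, applied to a single counting query rather than to a whole dataset. The function names, the toy patient records, and the choice of epsilon are all assumptions made for illustration, not anything prescribed by the text.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a zero-mean Laplace distribution.

    The difference of two independent exponential draws, each with
    mean `scale`, is Laplace-distributed with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1 / epsilon makes this
    single query epsilon-differentially private.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical toy records: 60 patients, 20 of them with a diagnosis.
patients = [{"age": age, "diagnosis": age % 3 == 0} for age in range(20, 80)]

rng = random.Random(0)
noisy = private_count(patients, lambda p: p["diagnosis"], epsilon=0.5, rng=rng)
print(f"noisy count: {noisy:.1f} (true count is 20)")
```

A smaller epsilon means more noise and stronger protection for any one individual. Averaged over many queries the noise cancels out, which is the sense in which data utility is maintained.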

Personal data creates increasingly accurate AI versions of artists and their performances, but the technology threatens to undercut the artists’ control over their own likeness and craftwork. The acceleration response is not to restrict the AI by regulating data usage, but to expand AI to resolve its own problems by digitally managing consent around how personal data is being used. The solution is not less AI, but more.

It is true that digital redlining threatens to reproduce unfair bank lending practices, but it is also true that the same technology can be applied to locate micro populations of good credit risk that are underserved by conventional lenders. The solution to discrimination in AI banking is more AI banking.

Innovation solving innovation problems is acceleration because advances can only generate more advancing. If a new application performs without problems, it will be accelerated. And, if it does raise problems, it will be accelerated to resolve them. The only direction, consequently, is ahead, and faster.

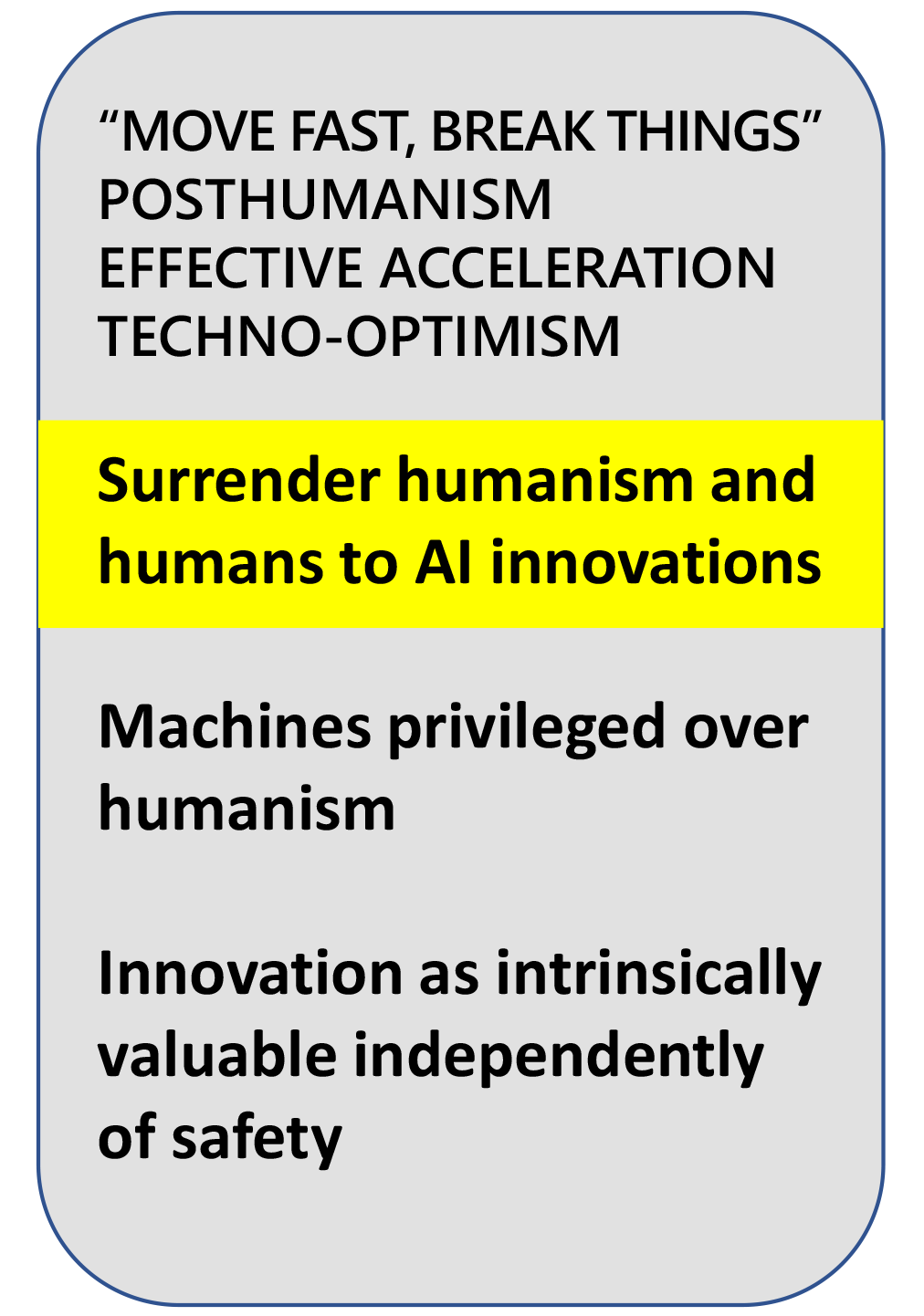

2. Innovation is intrinsically valuable

Like art, technical innovation is worth doing in-itself, and independently of the context surrounding its emergence. Because value exists even before it is possible to ask whether any particular advance will eventually lead to benefits or harms for society, innovation is born justified.

The nascent state tips an ethical burden. Like artists, engineers no longer need to justify why they are creating; others are responsible for explaining why they should stop. Obviously, there are occasions when stopping is imperative. Acceleration is not a synonym for recklessness, and it is not against social responsibility. It is only that the burden of justifying hesitations lies on those who want to slow down.

Kant perceived in his Critique of Judgment that the initial purpose of creative accomplishments is to be purposeless. The attitude is visible today in the bold architecture of midcentury European fascism, the painted art accompanying the Chinese Cultural Revolution, and the insights of Nietzsche’s Will to Power as compiled by his sister to suit her own toxic ideology. Almost no one defends past doctrines of intolerance, but it is equally true that only the crudest minds are incapable of perceiving the artistic and intellectual merit underneath the social reality and implications.

This underlying value is why we keep admiring the buildings, gazing at the paintings, and reading the aphorisms. Technological innovation participates in the same dynamic.

3. Uncertainty is encouraging

The fact that we do not know where a technological advance will lead is not a threat but an inspiration. The unknown is potential more than risk. This does not mean potential for something: it is not that advancing into the unknown might yield desirable outcomes and therefore we want it. Instead, it is potential in the sense of unlimited* possibilities that attracts, that is more motivating than menacing.

*Unlimited versus infinite: traveling a circle is infinite, but limited.

Both Stable Diffusion and DALL-E create images from text, but they respond differently to prompts gesturing outside orthodox requests. In one experiment, a prompt with no obvious image correspondence was introduced: the prompt called for restrictions on the use of AI image-generators because of their potentially harmful creations.

Responding to the text, Stable Diffusion incarnated the acceleration attitude by immediately producing an image: the unpredictability is a reason to generate. Going the other way, DALL-E generated no image and answered, "It looks like this request might not follow our content policy." It is not immediately clear why a text calling for restrictions on generative AI would itself be restricted by generative AI, but the critical hesitation is unmistakable. As opposed to the unknown being provocative because there is so much uncertainty, now it is forbidding because there is not enough certainty.

4. Decentralization

Permissions and restrictions governing AI derive from the broad community of users and their uses, instead of a select group's peremptory judgments.

The difference between decentralized and centralized ethics is a reversible relation of AI outcomes and regulation. Decentralized ethics initiates when users begin generating outcomes from a model. Through their interactions and selections, some results emerge as favorable algorithmic behaviors while others are rejected. Eventually, these divisions stiffen into permissions and prohibitions. Finally, the established rules convert the users who created them into the technology's regulatory authorities.

For centralized ethics, the process reverses. First there is the designation of authorities empowered to regulate, then the promulgation of their permissions and prohibitions, and then developers build their models within the established boundaries and, finally, the model is released to users who begin generating outcomes. So, there are guidelines and then outcomes, as opposed to the decentralized model where produced outcomes become the guidelines for future production.

Debate surrounds the picturing of gender-neutral nouns by generative AI. If the prompt calls for a doctor and a nurse, should the image be a man and a woman? Centralized ethics means rules are pre-established for these situations by selected authorities. OpenAI, for example, automatically responds to gender uncertainty with randomization: the doctor and nurse genders bounce back and forth in different productions. That works for a hospital, but not so well for "sumo wrestler," which, presumably, will generate unsatisfactory images about half the time.

Decentralized ethics begins with diverse users modifying unsatisfying results of text inputs. If a female is pictured for "sumo" the prompt will be re-submitted as "male sumo." Then, as users multiply and their new image generations proliferate, the gender correspondences recycle into the data pool used to create and shape the next rounds of graphics. While that happens, any visible gendering originally dictated by centralized guidelines or algorithmic inclinations begins giving way to the broader community of people and their projects, and that process creates effective ethical guidelines for gender in subsequent image generations. So, instead of rules and then graphic production, the produced graphics are the rules. And, instead of the few deciding for the many, the many decide for each other.
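The feedback loop described above can be sketched as a toy simulation. Everything in it is a simplifying assumption made for illustration: the function name, the starting share of 0.5 (mirroring randomized gendering), the pool sizes, and the premise that every image users finally keep matches their intent and is recycled into the pool. It is not a description of how any real image platform retrains.

```python
def simulate_feedback(initial_share: float, pool_size: int,
                      per_round: int, rounds: int):
    """Return a list of (share, reprompts) pairs, one per round.

    share     -- fraction of the image pool matching what users intend
    reprompts -- expected number of outputs users had to correct that round

    Toy assumptions: outputs match user intent at the rate of the current
    pool share; users re-prompt every mismatch until it matches; all kept
    images are added to the pool that shapes the next round.
    """
    matching = initial_share * pool_size
    total = float(pool_size)
    history = []
    for _ in range(rounds):
        share = matching / total
        reprompts = per_round * (1.0 - share)
        history.append((share, reprompts))
        # Every kept image matches user intent and recycles into the pool.
        matching += per_round
        total += per_round
    return history


for share, reprompts in simulate_feedback(0.5, pool_size=100, per_round=100, rounds=4):
    print(f"pool share matching users: {share:.2f}, corrections needed: {reprompts:.1f}")
```

Under these assumptions the share climbs from 0.50 toward 1 while the number of corrections falls each round. That is one way of picturing how produced outcomes harden into the guideline for later production.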

5. Embedding

Ethicists work inside AI development and with engineers to pose questions about human values, instead of remaining outside the process and emitting restrictions.

There are linguistic and logical dimensions to embedded ethics. Linguistically, the diverse vocabularies of ethicists, engineers, and domain experts mix through their shared experience of AI development. The resulting common understanding allows questions about the human values of data and algorithms to be managed collectively.

The logical dimension of embedded ethics is captured by the dual exercise of working from the abstract down to the practical, and from the practical up to the abstract. Ethicists who command theory can work together with AI designers and users to apply academic principles in real-world AI applications. And, engineers and users with their concrete experience and intuitive sense of its human implications can work with ethicists to elevate their ideas into theoretical understandings. The overarching result is ethics emerging from within AI development, as opposed to appearing above and outside as an imposition.

Is it fair for an AI skin cancer diagnostic model to perform better for light-skinned patients than for those with dark skin? The theoretical answer is no, but the truth is complicated by a detail: just as image analysis functions better on light skin, so too are the light-skinned more vulnerable to skin cancer. That element only gains admission to the discussion, though, when a common language has been established for collaborative discussion that joins ethicists and medical doctors across the expertise of AI engineers. Then there are technical questions about why image analysis declines in accuracy as skin loses color. And, how do those technical details correspond with medical differences in vulnerability? Finally, one core moral question: if resources are going to be dedicated to increasing AI diagnostic accuracy, where should they be focused? On those most vulnerable to the disease? On those whose skin currently produces the most inaccurate results? Somewhere else?

There are no perfect answers, but embedded ethicists will outperform those whose ideas are confined to abstract theory.
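One way an embedded team might put a number on the performance gap in the skin cancer example is to compare sensitivity, the share of actual cancers the model catches, across patient groups. The sketch below is only illustrative: the function name, the record layout, and the handful of records are invented, and sensitivity is just one of several metrics a team could choose.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: fraction of true cancers the model flagged}.

    Each record is a tuple (group, has_cancer, model_flagged). Only
    patients who actually have the disease count toward sensitivity.
    """
    caught = defaultdict(int)
    total = defaultdict(int)
    for group, has_cancer, model_flagged in records:
        if has_cancer:
            total[group] += 1
            if model_flagged:
                caught[group] += 1
    return {group: caught[group] / total[group] for group in total}


# Made-up records for illustration only, not real clinical data.
records = [
    ("lighter", True, True), ("lighter", True, True),
    ("lighter", True, True), ("lighter", True, False),
    ("lighter", False, False),
    ("darker", True, True), ("darker", True, False),
    ("darker", False, False),
]

rates = sensitivity_by_group(records)
gap = rates["lighter"] - rates["darker"]
print(rates, f"gap = {gap:.2f}")
```

A figure like this gap is where the technical question (why accuracy differs) meets the medical one (who is most vulnerable) and the moral one (where resources should go).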

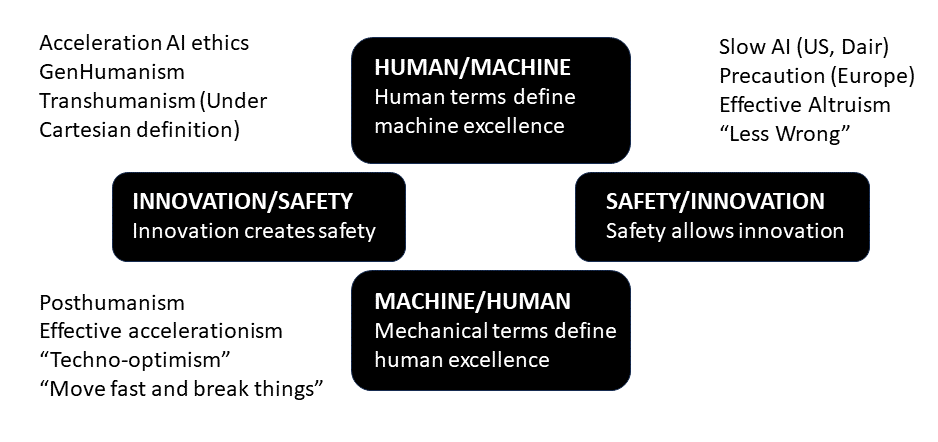

Acceleration in context of other approaches

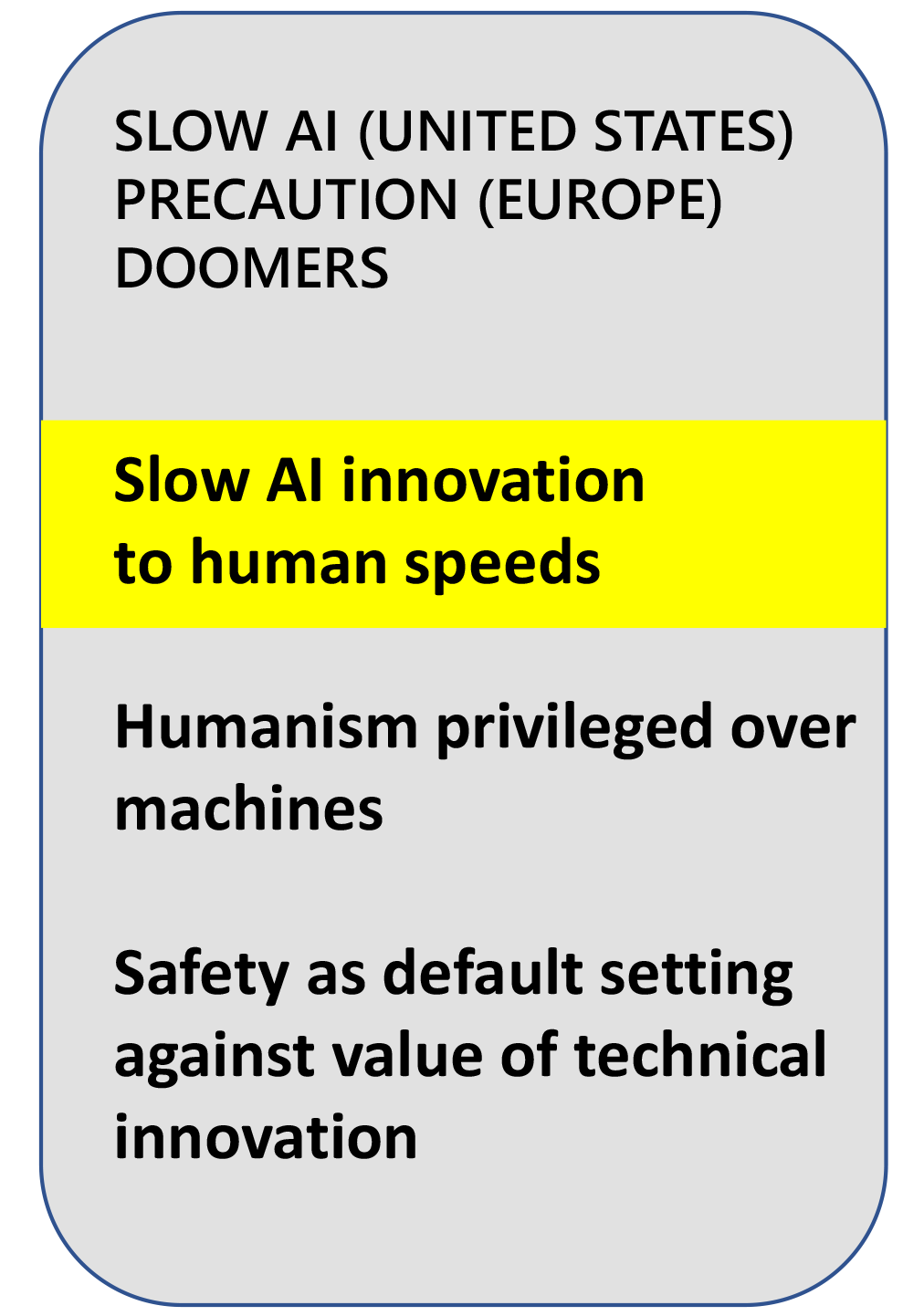

On one side, acceleration ethics overcomes the hesitations of Slow AI and Precaution, and the anxieties of the Doomers. On the other side, acceleration refuses the human surrender to technology as "move fast and break things," and as Posthumanism, Effective Acceleration, and Techno-optimism.

Acceleration ethics preserves humanism, but at AI velocity.